🎯 Welcome to Djamgatech Certification Master App 🚀

Djamgatech is your ultimate companion for achieving top certifications across Cloud Computing, Cybersecurity, Finance, Healthcare, and Project Management. Harnessing cutting-edge Artificial Intelligence, our app provides personalized learning experiences with constantly updated quizzes, interactive flashcards, and dynamic concept maps.

Why Choose Djamgatech?

- ✨ AI-Powered Adaptive Learning: Our AI identifies your strengths and weaknesses, tailoring quizzes to maximize your learning efficiency.

- 📈 Real-Time Updates: Our content stays up to date with the latest certification exams and industry standards, ensuring you're always prepared.

- 🧠 Interactive Concept Maps: Visualize and connect complex topics, enhancing your understanding and retention.

- 📊 Detailed Progress Tracking: Receive instant feedback and monitor your improvement continuously.

🤖 AI Chatbots to Enhance Your Learning

Djamgatech includes two powerful AI chatbots to enhance your experience:

- 📚 Certification Quiz Chatbot: Interact directly with an intelligent chatbot that quizzes you on your chosen certifications. Answer questions and receive instant corrections, detailed explanations, and authoritative references to solidify your understanding.

- 💼 Career Guidance Chatbot: Leverage our smart career chatbot to find relevant job opportunities tailored to your skills, certifications, and career aspirations. Accelerate your job search and connect seamlessly with ideal job openings.

🌟 Featured Certification Exams:

💻 AWS Certified Solutions Architect

This certification validates expertise in designing and deploying scalable, highly available, and fault-tolerant systems on AWS. It covers key concepts like IAM roles, S3 bucket policies, encryption, and access controls. With Djamgatech’s free, AI-powered AWS quizzes with explanations, users can practice real-world scenarios and reinforce their understanding of AWS architecture best practices. Mastering this certification can open doors to roles like Cloud Architect or Solutions Engineer, with high demand in the tech industry.
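To give a feel for the S3 bucket policy and encryption topics mentioned above, here is a minimal sketch of the kind of policy a practice question might ask you to read. It builds a bucket policy that denies any request not sent over TLS. The function name and the bucket name `example-quiz-bucket` are hypothetical and chosen only for illustration; this is not taken from the Djamgatech question bank.

```python
import json


def build_tls_only_bucket_policy(bucket_name: str) -> dict:
    """Return an S3 bucket policy that denies requests made without TLS.

    The bucket name is supplied by the caller; nothing here talks to AWS.
    """
    bucket_arn = f"arn:aws:s3:::{bucket_name}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                # Cover both the bucket itself and every object inside it.
                "Resource": [bucket_arn, f"{bucket_arn}/*"],
                # aws:SecureTransport is "false" when the request is plain HTTP.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }


# Hypothetical bucket name, used only for this example.
policy = build_tls_only_bucket_policy("example-quiz-bucket")
print(json.dumps(policy, indent=2))
```

An explicit `Deny` is used because, in IAM policy evaluation, an explicit deny overrides any allow granted elsewhere.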

🔐 CISSP – Certified Information Systems Security Professional

CISSP is a globally recognized certification for cybersecurity professionals, focusing on access control, authentication, authorization, and audit logging. Djamgatech’s AI quizzes help users internalize complex security concepts through targeted questions and concept maps. Earning this certification can lead to roles like Security Consultant or Chief Information Security Officer (CISO), significantly boosting earning potential and career growth.

📊 CFA – Chartered Financial Analyst

The CFA certification is the gold standard for investment professionals, covering financial analysis, valuation models, and risk management. Djamgatech’s AI-driven quizzes provide practice on critical topics like Discounted Cash Flow (DCF) analysis, helping users master the material efficiently. Passing the CFA exams can lead to prestigious roles like Portfolio Manager or Financial Analyst, enhancing credibility in the finance industry.
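As an illustration of the Discounted Cash Flow topic mentioned above, the sketch below values a stream of forecast cash flows plus a terminal value. It assumes year-end cash flows, a single constant discount rate, and a Gordon-growth terminal value; the function and parameter names are illustrative, and the figures are made up for the example.

```python
def discounted_cash_flow(cash_flows, discount_rate, terminal_growth):
    """Present value of forecast cash flows plus a Gordon-growth terminal value.

    cash_flows: forecast cash flows for years 1..N (received at year end)
    discount_rate: required rate of return, e.g. 0.10 for 10%
    terminal_growth: perpetual growth rate assumed after year N
    """
    if not cash_flows:
        raise ValueError("cash_flows must contain at least one value")
    if discount_rate <= terminal_growth:
        raise ValueError("discount_rate must exceed terminal_growth")

    # Discount each forecast cash flow back to today.
    pv_forecast = sum(
        cf / (1 + discount_rate) ** year
        for year, cf in enumerate(cash_flows, start=1)
    )

    # Terminal value at the end of year N, then discounted to today.
    terminal_value = (
        cash_flows[-1] * (1 + terminal_growth) / (discount_rate - terminal_growth)
    )
    pv_terminal = terminal_value / (1 + discount_rate) ** len(cash_flows)

    return pv_forecast + pv_terminal


# Made-up inputs: two forecast years, 10% discount rate, no terminal growth.
value = discounted_cash_flow([110.0, 121.0], discount_rate=0.10, terminal_growth=0.0)
print(round(value, 2))
```

In most valuations the terminal value dominates the result, so small changes to the growth or discount rate move the answer a lot; exam questions often probe exactly that sensitivity.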

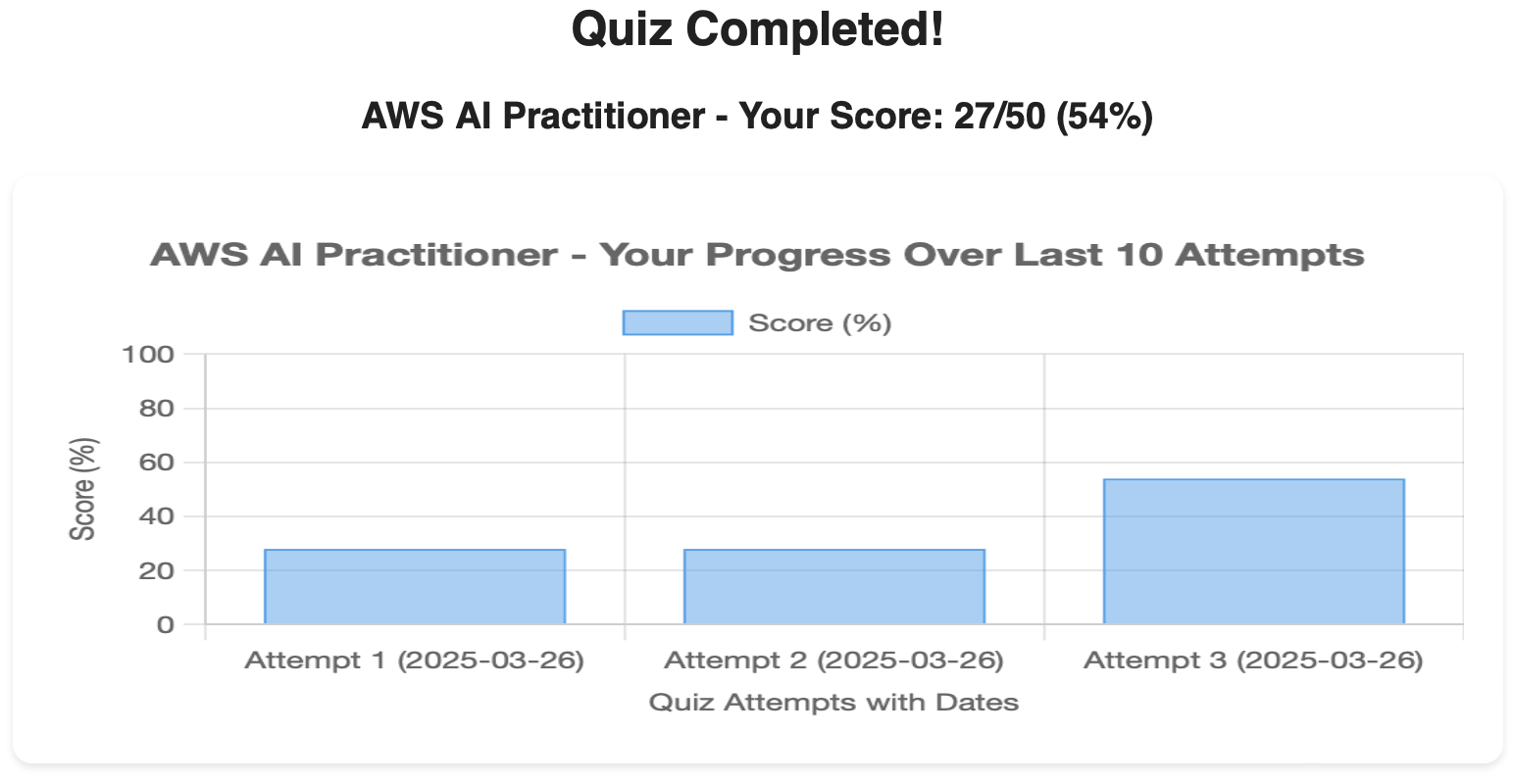

🤖 AWS AI Practitioner

This certification introduces the fundamentals of AI and machine learning on AWS, including key services and use cases. Djamgatech’s AI quizzes help users test their knowledge of AWS AI tools and concepts, ensuring they’re exam-ready. Achieving this certification can pave the way for roles like AI Specialist or ML Engineer, positioning users at the forefront of AI innovation.

💻 AWS Certified Developer Associate

Focused on application development on AWS, this certification covers topics like AWS SDKs, CI/CD pipelines, and serverless computing. Djamgatech’s AI quizzes provide hands-on practice, helping users solidify their coding and deployment skills. This certification can lead to roles like Cloud Developer or DevOps Engineer, offering opportunities to work on cutting-edge cloud projects.

🔄 AWS Certified DevOps Engineer

This certification emphasizes automation, CI/CD, and infrastructure as code on AWS. Djamgatech’s AI-powered quizzes simulate real-world DevOps challenges, ensuring users are well-prepared for the exam. Earning this certification can lead to high-demand roles like DevOps Engineer or Site Reliability Engineer, with a focus on optimizing cloud operations.

☁️ AWS Cloud Practitioner

Designed for beginners, this certification provides foundational knowledge of AWS services and cloud concepts. Djamgatech’s AI quizzes help users build confidence by testing their understanding of core AWS principles. This certification is a stepping stone to more advanced AWS roles, making it ideal for those starting their cloud journey.

📊 AWS Data Engineer Associate

This certification focuses on designing and implementing data solutions on AWS, including data lakes and ETL pipelines. Djamgatech’s AI quizzes help users practice data engineering concepts, ensuring they’re ready for the exam. Achieving this certification can lead to roles like Data Engineer or Big Data Architect, with opportunities to work on large-scale data projects.

🧠 AWS Machine Learning Engineer

This certification validates expertise in building, training, and deploying machine learning models on AWS. Djamgatech’s AI quizzes provide targeted practice on ML concepts and AWS tools, helping users master the material. Earning this certification can lead to roles like Machine Learning Engineer or Data Scientist, with high demand in AI-driven industries.

🏗️ AWS Solutions Architect

This advanced certification focuses on designing and deploying complex AWS architectures. Djamgatech’s AI quizzes simulate real-world design challenges, ensuring users are well-prepared. Achieving this certification can lead to senior roles like Cloud Architect or Technical Lead, with significant career advancement opportunities.

🌐 AWS Advanced Networking

This certification covers advanced networking concepts, including hybrid cloud environments and AWS networking services. Djamgatech’s AI quizzes help users test their knowledge of networking principles and AWS tools. Earning this certification can lead to roles like Network Architect or Cloud Network Engineer, with a focus on designing and managing complex network infrastructures.

🔎 Azure AI Fundamentals

This certification introduces the basics of AI and machine learning on Microsoft Azure. Djamgatech’s AI quizzes provide practice on Azure AI tools and concepts, ensuring users are exam-ready. Achieving this certification can lead to roles like AI Developer or Data Scientist, with opportunities to work on innovative AI solutions.

📡 Microsoft Fabric Data Engineer Associate

This certification focuses on data engineering in Microsoft Fabric, including data integration and processing. Djamgatech’s AI quizzes help users master data engineering concepts, ensuring they’re prepared for the exam. Earning this certification can lead to roles like Data Engineer or Cloud Data Architect, with high demand in data-driven industries.

☁️ Azure Fundamentals

This certification provides an introduction to Microsoft Azure services and cloud concepts. Djamgatech’s AI quizzes help users build foundational knowledge, making it ideal for beginners. Achieving this certification can open doors to entry-level cloud roles, setting the stage for more advanced certifications.

📈 CAPM Certification

The CAPM certification is an entry-level project management credential, ideal for those starting their project management careers. Djamgatech’s AI quizzes help users practice project management concepts, ensuring they’re ready for the exam. Earning this certification can lead to roles like Project Coordinator or Junior Project Manager, providing a strong foundation for career growth.

🏥 CCMA Certification

The CCMA certification is designed for clinical medical assistants, covering essential healthcare skills. Djamgatech’s AI quizzes help users test their knowledge of medical procedures and patient care, ensuring they’re exam-ready. Achieving this certification can lead to roles like Clinical Medical Assistant or Patient Care Technician, with opportunities in the growing healthcare industry.

❤️ CCRN Certification

The CCRN certification is for critical care nurses, validating their expertise in caring for critically ill patients. Djamgatech’s AI quizzes provide practice on critical care concepts, ensuring users are well-prepared. Earning this certification can lead to advanced nursing roles, with opportunities for specialization and higher earning potential.

🛡️ Certified Ethical Hacker (CEH)

The CEH certification focuses on ethical hacking and penetration testing, teaching users how to identify and mitigate security vulnerabilities. Djamgatech’s AI quizzes simulate real-world hacking scenarios, ensuring users are exam-ready. Achieving this certification can lead to roles like Ethical Hacker or Security Analyst, with high demand in cybersecurity.

📈 CFP Certification

The CFP certification focuses on financial planning and personal wealth management. Djamgatech’s AI quizzes help users practice financial planning concepts, ensuring they’re ready for the exam. Earning this certification can lead to roles like Financial Planner or Wealth Manager, with opportunities to help clients achieve their financial goals.

🩺 CHDA Certification

The CHDA certification is for health data analysts, validating their expertise in managing and analyzing healthcare data. Djamgatech’s AI quizzes help users test their knowledge of health data concepts, ensuring they’re exam-ready. Achieving this certification can lead to roles like Health Data Analyst or Healthcare Consultant, with opportunities in the growing healthcare data field.

🔐 CISM Certification

The CISM certification focuses on information security management, teaching users how to design and manage security programs. Djamgatech’s AI quizzes provide practice on security management concepts, ensuring users are well-prepared. Earning this certification can lead to roles like Security Manager or IT Director, with high demand in cybersecurity leadership.

🔏 CISSP Certification Quiz with Answers

The CISSP certification is a globally recognized credential for cybersecurity professionals, focusing on risk management and security operations. Djamgatech’s AI quizzes help users internalize complex security concepts, ensuring they’re exam-ready. Achieving this certification can lead to roles like Security Consultant or CISO, with significant career advancement opportunities.

☸️ CKA Certification

The CKA certification validates expertise in Kubernetes administration, including cluster management and troubleshooting. Djamgatech’s AI quizzes provide hands-on practice, ensuring users are ready for the exam. Earning this certification can lead to roles like Kubernetes Administrator or Cloud Engineer, with high demand in containerized environments.

📊 CMA Certification

The CMA certification is for management accountants, covering financial planning, analysis, and control. Djamgatech’s AI quizzes help users practice accounting concepts, ensuring they’re exam-ready. Achieving this certification can lead to roles like Management Accountant or Financial Controller, with opportunities for career growth in finance.

🏥 CNA Certification

The CNA certification is for nursing assistants, validating their skills in patient care. Djamgatech’s AI quizzes help users test their knowledge of nursing concepts, ensuring they’re exam-ready. Earning this certification can lead to roles like Certified Nursing Assistant or Patient Care Technician, with opportunities in the healthcare industry.

🛡️ CompTIA CySA+

The CompTIA CySA+ certification focuses on cybersecurity analysis, teaching users how to detect and respond to security threats. Djamgatech’s AI quizzes simulate real-world scenarios, ensuring users are well-prepared. Achieving this certification can lead to roles like Cybersecurity Analyst or Threat Intelligence Analyst, with high demand in cybersecurity.

🔒 CompTIA Security+

The CompTIA Security+ certification covers IT security fundamentals, including network security and risk management. Djamgatech’s AI quizzes help users practice security concepts, ensuring they’re exam-ready. Earning this certification can lead to entry-level roles in IT security, providing a strong foundation for career growth.

📑 CPA Certification

The CPA certification is for accounting professionals, covering auditing, taxation, and financial reporting. Djamgatech’s AI quizzes provide practice on accounting concepts, ensuring users are ready for the exam. Achieving this certification can lead to roles like Certified Public Accountant or Financial Auditor, with high earning potential.

💉 CPC Certification

The CPC certification is for medical coders, validating their expertise in medical billing and coding. Djamgatech’s AI quizzes help users test their knowledge of coding concepts, ensuring they’re exam-ready. Earning this certification can lead to roles like Medical Coder or Billing Specialist, with opportunities in the healthcare industry.

💊 CPhT Certification

The CPhT certification is for pharmacy technicians, covering medication dispensing and pharmacy operations. Djamgatech’s AI quizzes help users practice pharmacy concepts, ensuring they’re exam-ready. Achieving this certification can lead to roles like Pharmacy Technician or Medication Safety Officer, with opportunities in the pharmaceutical industry.

📜 CSM Certification

The CSM certification is for Scrum Masters, validating their expertise in Agile project management. Djamgatech’s AI quizzes help users practice Agile concepts, ensuring they’re ready for the exam. Earning this certification can lead to roles like Scrum Master or Agile Coach, with high demand in project management.

🏦 CTP Certification

The CTP certification focuses on treasury management, including cash flow and risk management. Djamgatech’s AI quizzes help users practice treasury concepts, ensuring they’re exam-ready. Achieving this certification can lead to roles like Treasury Analyst or Cash Manager, with opportunities in corporate finance.

📋 Enrolled Agent Certification

The Enrolled Agent certification is for tax professionals, validating their expertise in tax preparation and representation. Djamgatech’s AI quizzes help users test their knowledge of tax concepts, ensuring they’re exam-ready. Earning this certification can lead to roles like Tax Consultant or Enrolled Agent, with opportunities in tax advisory.

📉 FRM Certification

The FRM certification focuses on financial risk management, teaching users how to identify and mitigate financial risks. Djamgatech’s AI quizzes provide practice on risk management concepts, ensuring users are well-prepared. Achieving this certification can lead to roles like Risk Manager or Financial Analyst, with high demand in finance.

☁️ Google Associate Cloud Engineer: Google Cloud certification FREE

This certification validates expertise in deploying and managing applications on Google Cloud. Djamgatech’s AI quizzes help users practice cloud engineering concepts, ensuring they’re exam-ready. Earning this certification can lead to roles like Cloud Engineer or DevOps Engineer, with opportunities in cloud computing.

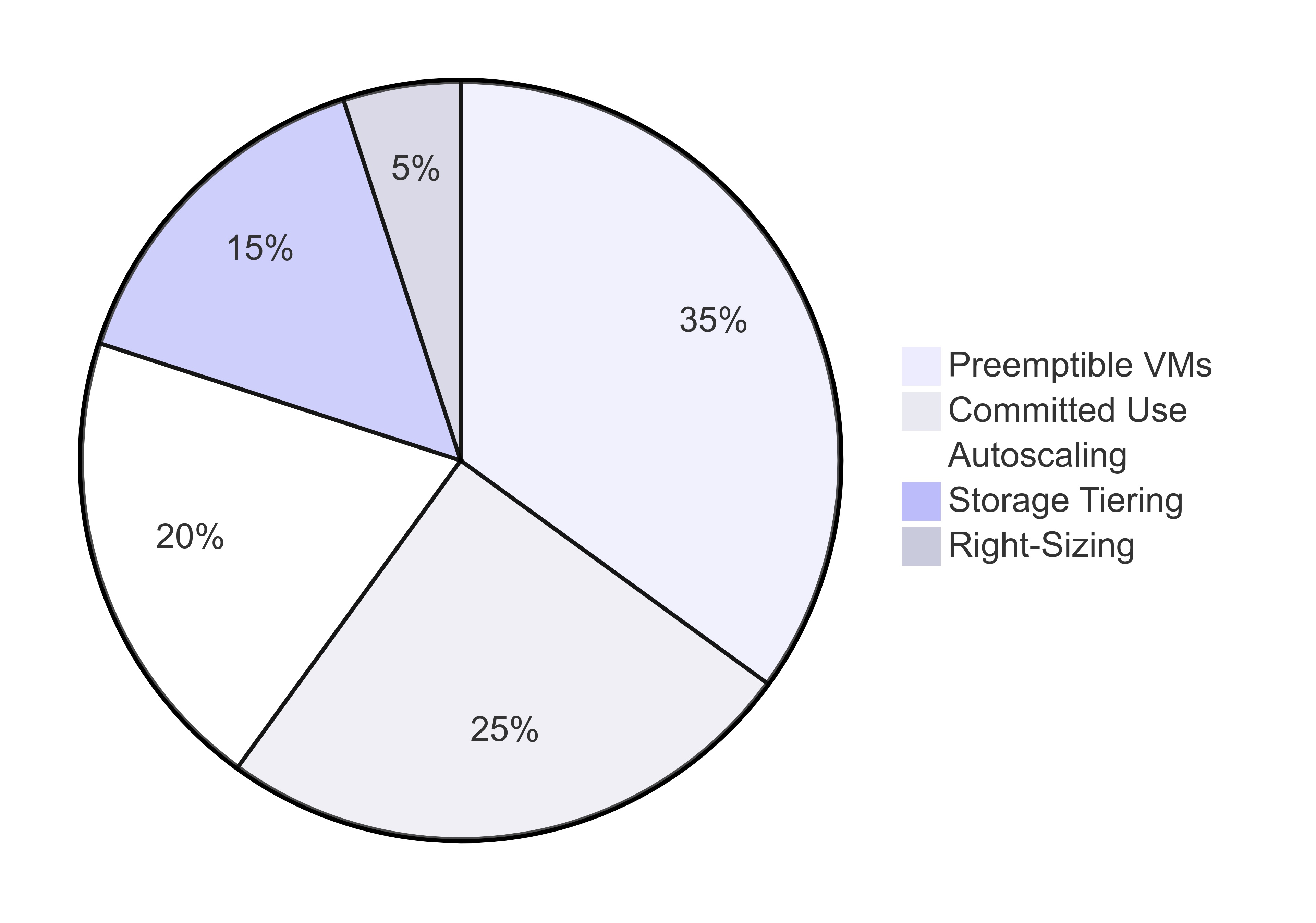

☁️ Google Professional Cloud Architect: Master GCP Design & Strategy (FREE Prep)

This advanced certification validates your ability to design, develop, and manage scalable, secure, and reliable Google Cloud solutions. Djamgatech’s AI-powered quizzes and case-study simulations help you master key concepts like infrastructure design, compliance, and cost optimization—ensuring you’re ready for real-world scenarios tested on the exam. Earn this credential to unlock high-demand roles like Cloud Architect or Solutions Engineer, with salaries averaging $150K+ in the fast-growing cloud industry.

🔐 Google Professional Cloud Security Engineer

This certification focuses on securing Google Cloud environments, including identity management and data protection. Djamgatech’s AI quizzes help users test their knowledge of cloud security concepts, ensuring they’re exam-ready. Achieving this certification can lead to roles like Cloud Security Engineer or Security Architect, with high demand in cybersecurity.

📊 Google Professional Data Engineer

This certification validates expertise in designing and implementing data solutions on Google Cloud. Djamgatech’s AI quizzes help users practice data engineering concepts, ensuring they’re ready for the exam. Earning this certification can lead to roles like Data Engineer or Big Data Architect, with opportunities in data-driven industries.

🧠 Google Professional Machine Learning Engineer

This certification focuses on building and deploying machine learning models on Google Cloud. Djamgatech’s AI quizzes provide targeted practice on ML concepts, ensuring users are exam-ready. Achieving this certification can lead to roles like Machine Learning Engineer or Data Scientist, with high demand in AI-driven industries.

⚙️ Lean Six Sigma Black Belt

The Lean Six Sigma Black Belt certification focuses on process optimization and business efficiency. Djamgatech’s AI quizzes help users practice Lean Six Sigma concepts, ensuring they’re exam-ready. Earning this certification can lead to roles like Process Improvement Manager or Business Consultant, with opportunities in operations management.

☁️ Microsoft Azure Administrator

This certification validates expertise in managing and deploying Microsoft Azure services. Djamgatech’s AI quizzes help users practice Azure administration concepts, ensuring they’re exam-ready. Achieving this certification can lead to roles like Cloud Administrator or IT Manager, with opportunities in cloud computing.

🔐 Microsoft Certified Azure Security Engineer Associate

This certification focuses on securing Microsoft Azure environments, including identity management and threat protection. Djamgatech’s AI quizzes help users test their knowledge of Azure security concepts, ensuring they’re exam-ready. Earning this certification can lead to roles like Cloud Security Engineer or Security Architect, with high demand in cybersecurity.

📊 PMP - Project Management Professional: Free PMP Quiz

The PMP certification is the gold standard for project managers, validating expertise in advanced project management strategies. Djamgatech’s AI quizzes help users practice project management concepts, ensuring they’re exam-ready. Achieving this certification can lead to roles like Project Manager or Program Manager, with significant career advancement opportunities.

📑 RHIT Certification

The RHIT certification is for health information technicians, validating their expertise in managing patient data. Djamgatech’s AI quizzes help users test their knowledge of health information concepts, ensuring they’re exam-ready. Earning this certification can lead to roles like Health Information Technician or Medical Records Manager, with opportunities in healthcare administration.

🔄 Six Sigma Green Belt

The Six Sigma Green Belt certification focuses on process improvement and quality control. Djamgatech’s AI quizzes help users practice Six Sigma concepts, ensuring they’re exam-ready. Achieving this certification can lead to roles like Quality Assurance Manager or Process Improvement Specialist, with opportunities in operations management.

🔵 Microsoft Dynamics 365 Customer Engagement

The Microsoft Dynamics 365 CE certification validates expertise in CRM solutions, including sales, customer service, and marketing automation. Djamgatech's AI quizzes help users master Power Platform integration and business process flows, ensuring exam readiness. Earning this certification can lead to roles like CRM Consultant or Business Applications Specialist, with opportunities in digital transformation projects.

💙 Salesforce Administrator

The Salesforce Administrator certification demonstrates proficiency in configuring and managing Salesforce CRM platforms. Djamgatech's AI quizzes provide targeted practice on security models, automation tools, and data management, preparing users for certification success. This credential opens doors to roles like Salesforce Admin or CRM Analyst, with high demand in cloud-based customer relationship management.

🔴 Oracle Cloud Infrastructure Architect

The OCI Architect certification validates skills in designing secure, high-performance solutions on Oracle Cloud. Djamgatech's AI quizzes cover compute services, autonomous databases, and network architecture, ensuring comprehensive exam preparation. Achieving this certification can lead to roles like Cloud Architect or Infrastructure Engineer, particularly in enterprises using Oracle technologies.

🟢 ITIL 4 Foundation

The ITIL 4 certification establishes foundational knowledge of IT service management best practices. Djamgatech's AI quizzes help users master the service value system and key ITIL practices, streamlining exam preparation. This globally recognized credential qualifies professionals for IT Service Manager or Process Coordinator roles across all industries.

🔘 Cisco CCNA

The CCNA certification validates core networking skills including IP addressing, network access, and security fundamentals. Djamgatech's AI quizzes provide hands-on practice with routing protocols and Cisco technologies, building exam confidence. Certified professionals qualify for Network Technician or Systems Administrator roles, with pathways to advanced Cisco certifications.
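For the IP addressing skills mentioned above, a short sketch using Python's standard `ipaddress` module shows the kind of subnet arithmetic CCNA-style questions typically ask for. The helper name `describe_subnet` and the example network are illustrative assumptions, not part of any exam or of the app.

```python
import ipaddress


def describe_subnet(cidr: str) -> dict:
    """Summarize an IPv4 subnet given in CIDR notation, e.g. '192.168.10.0/26'."""
    network = ipaddress.ip_network(cidr)
    # hosts() excludes the network and broadcast addresses for ordinary subnets.
    hosts = list(network.hosts())
    return {
        "network": str(network.network_address),
        "netmask": str(network.netmask),
        "broadcast": str(network.broadcast_address),
        "usable_hosts": len(hosts),
        "first_host": str(hosts[0]),
        "last_host": str(hosts[-1]),
    }


# Example network chosen only for illustration.
for field, value in describe_subnet("192.168.10.0/26").items():
    print(f"{field}: {value}")
```

A /26 leaves 6 host bits, so the subnet holds 64 addresses, 62 of them usable once the network and broadcast addresses are set aside.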

🔮 JNCIP-ENT (Juniper Enterprise Routing & Switching)

The JNCIP-ENT certification validates advanced skills in Juniper enterprise networking, including EVPN/VXLAN, MPLS, and Junos automation. Djamgatech's AI quizzes help users master Junos-specific implementations and troubleshooting techniques. This certification is particularly valuable for telecom and service provider roles, with 85% of Tier 1 carriers using Juniper infrastructure. Professionals with JNCIP-ENT earn an average salary of $118,000 (Juniper Networks 2023 Survey) and qualify for roles like Network Architect or Senior Network Engineer in carrier environments.

☁️ AWS Certified Advanced Networking - Specialty

The AWS Advanced Networking certification focuses on hybrid cloud architectures, AWS networking services, and global infrastructure. Djamgatech's AI-powered quizzes provide scenario-based practice with Direct Connect, Route 53, and advanced VPC configurations. As the #1 most valuable cloud networking certification, it commands an average salary of $145,000 (Global Knowledge 2025) and boosts AWS Solutions Architect salaries by 27%. Certified professionals are recruited for Cloud Network Architect and Hybrid Infrastructure Specialist roles, especially in enterprises undergoing cloud migration.

🔒 Certified Cloud Security Professional (CCSP)

The CCSP certification validates expertise in cloud security architecture and operations. Djamgatech's AI quizzes help master cloud data security, identity management, and compliance frameworks. With 48% year-over-year growth in cloud security roles (ISC² 2023), CCSP holders earn $150,000 average salary and qualify for Cloud Security Architect or Cloud Risk Manager positions at AWS, Microsoft, and cloud-first enterprises.

⚔️ Offensive Security Certified Professional (OSCP)

The OSCP certification proves hands-on penetration testing skills through a grueling 24-hour practical exam. Djamgatech's scenario-based quizzes prepare users for real-world exploitation techniques. As the #1 most requested pentesting cert (Cyberseek.org), OSCP holders command $120,000+ salaries and are recruited by top security firms like CrowdStrike and Mandiant for Red Team and Vulnerability Assessment roles.

🛡️ GIAC Security Essentials (GSEC)

The GSEC certification validates practical security knowledge across domains like defense, cryptography, and incident response. Djamgatech's AI drills help reinforce hands-on security skills. With 72% of Fortune 500 companies requiring GIAC certs for security roles (SANS 2023), GSEC holders earn $110,000 average salaries and qualify for Security Operations Center (SOC) Analyst and Threat Hunter positions.

🏭 GIAC ICS Security

The GIAC ICS certification validates critical infrastructure protection skills for SCADA/OT systems. Djamgatech's AI quizzes cover industrial protocols, Purdue Model architecture, and ICS-specific threats. With 300% growth in OT cyberattacks (Dragos 2023), certified professionals earn $135,000 average salaries at energy firms, water utilities, and manufacturing plants implementing IIoT security.

🔧 DevSecOps Professional

The DevSecOps certification demonstrates CI/CD pipeline security expertise. Djamgatech's scenario-based drills cover SAST/DAST tools, Kubernetes hardening, and compliance-as-code. As 67% of enterprises now mandate DevSecOps skills (GitLab 2023), certified engineers command $145,000+ salaries at cloud-native companies and financial institutions automating security.

🤖 Certified AI Security Engineer (CAISE)

The CAISE certification validates protection of ML systems against adversarial attacks. Djamgatech's AI-powered quizzes train users on model hardening, data poisoning defense, and AI supply chain risks. With AI security jobs growing 450% (MITRE 2023), CAISE holders earn $160,000 premiums at AI-first companies and defense contractors securing generative AI deployments.

⚡ NERC CIP Compliance Professional

This certification validates expertise in securing North America's bulk electric systems against cyber-physical threats. Covers critical standards like CIP-002 (asset identification) and CIP-013 (supply chain risk). With $1M/day fines for non-compliance (FERC 2023), certified professionals command $125,000+ salaries at utilities and grid operators protecting 3,200+ critical assets.

🔐 ISO 27001 Lead Implementer

The global gold standard for Information Security Management Systems (ISMS), teaching risk assessment against the Annex A controls (114 in the 2013 edition, 93 in the 2022 revision). Professionals learn to align security programs with GDPR, SOC 2, and cloud compliance requirements. With 48,000+ certified organizations worldwide (ISO 2023), holders earn 35% salary premiums in roles bridging technical and regulatory security demands.

🌀 Post-Quantum Cryptography Specialist

Certifies skills in quantum-resistant algorithms (CRYSTALS-Kyber/Dilithium) and QKD networks defending against "Harvest Now, Decrypt Later" attacks. Covers NIST standardization processes and migration strategies for classical crypto systems. As 20% of global enterprises will face quantum threats by 2028 (Gartner), certified experts earn $150,000+ securing government and financial systems with 25+ year data sensitivity.

🇪🇺 EU Cybersecurity Certification (EUCC)

ENISA's mandatory framework under NIS2 Directive, unifying 28 national standards for public sector contracts. Validates GDPR-aligned controls like "data protection by design" and cross-border incident reporting. With 72% of CERT-EU job postings requiring EUCC (2024), certified professionals earn €110,000+ securing critical infrastructure across the European single market.

⛓️ Certified Blockchain Security Professional (CBSP)

Validates expertise in securing blockchain networks against 51% attacks, smart contract vulnerabilities, and crypto wallet exploits. Covers Ethereum, Hyperledger, and zero-knowledge proof architectures. With blockchain attacks increasing 300% YoY (Chainalysis 2024), CBSP holders earn $145,000+ auditing DeFi protocols and enterprise blockchain deployments.

🚗 TÜV SÜD Automotive Cybersecurity Engineer

Certifies skills in securing connected vehicles against CAN bus injections, ECU exploits, and V2X communication threats. Aligns with UN R155 regulations mandating cyber protections for all new vehicles by 2025. Professionals earn $130,000+ at OEMs and Tier 1 suppliers implementing ISO/SAE 21434 standards.

🛰️ International Space Security Professional (ISSP)

Focuses on protecting satellites (GPS, Starlink) from jamming, spoofing, and laser attacks. Covers space-ground segment encryption and orbital cyber-physical systems. With 1,000+ new satellites launching annually (ESA 2024), ISSP-certified engineers command $160,000+ salaries in defense and commercial space sectors.

🏥 Certified Ethical Hacker for Medical Systems (CEHMS)

Specialized certification for penetration testing insulin pumps, MRI machines, and IoMT devices. Teaches FDA pre-market submission requirements and IEC 62304 compliance. 90% of hospitals now require this for device security roles (HIMSS 2024), with salaries reaching $140,000 at healthcare tech firms.

🤖 CIPM-AI (Certified Information Privacy Manager for AI)

Combines GDPR Article 22 with AI-specific frameworks like the EU AI Act and the NIST AI RMF. Focuses on algorithmic impact assessments and synthetic data governance. 70% of Fortune 500 companies now seek this certification (IAPP 2024), with $155,000+ roles in AI ethics boards and compliance.

🔮 Quantum Network Engineer (QNE)

Certifies skills in deploying QKD networks and post-quantum VPNs using NIST-approved algorithms. Covers quantum repeater architectures and entanglement-based key distribution. With national quantum networks launching in 15+ countries (ITU 2024), QNEs earn $175,000+ at telecoms and defense contractors.

👓 Industrial Metaverse Security Specialist (IMVSEC)

Focuses on securing digital twin environments against asset hijacking, physics engine exploits, and AR/VR social engineering. Required for 45% of Industry 4.0 projects (McKinsey 2024), with $150,000+ salaries at manufacturing and energy firms building enterprise metaverses.

🏭 ISA/IEC 62443 Cyber-Physical Systems Architect (CPSA)

Advanced certification for securing OT/IoT convergence in smart cities and critical infrastructure. Teaches Purdue Model extensions for 5G-enabled edge devices. Mandatory for contractors working on U.S. EO 14028 compliance, with $165,000+ defense sector roles.

🚁 Drone Cybersecurity Expert (DCSE)

Validates skills in countering GPS spoofing, FLIR sensor attacks, and swarm command hijacking. Aligns with FAA Remote ID regulations and NATO STANAG 4586. 80% of commercial drone fleets now require DCSE-certified staff (Drone Industry Insights 2024), paying $135,000+.

🧠 Certified Neurosecurity Specialist (CNS)

Pioneering certification for securing brain-computer interfaces (BCIs) and neural implants against signal injection and memory alteration attacks. Covers FDA Class III device requirements for neurotechnology. With BCI adoption growing 400% annually (NeuroTechX 2024), CNS holders earn $180,000+ in medtech and defense.

💡 Unlock Your Potential with the Djamgatech App:

By achieving these certifications, you position yourself for higher-paying jobs, rapid career advancement, and valuable industry recognition.